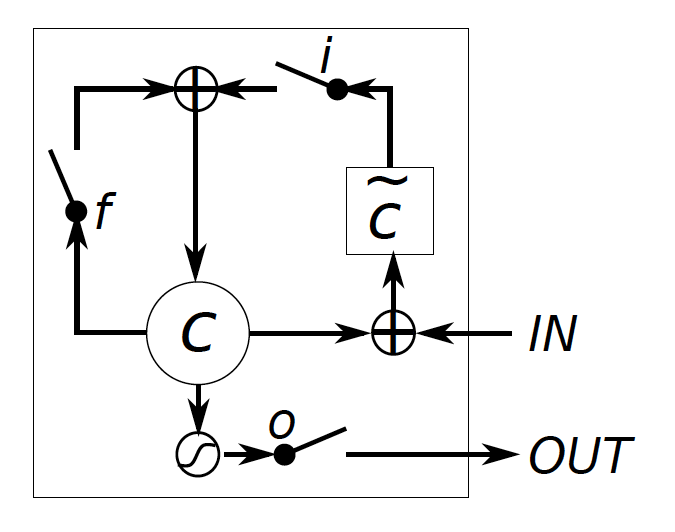

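LSTM is computationally heavy and has many parameters, which makes it hard to train. The GRU (Gated Recurrent Unit) was proposed in response.

The simplification idea behind GRU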


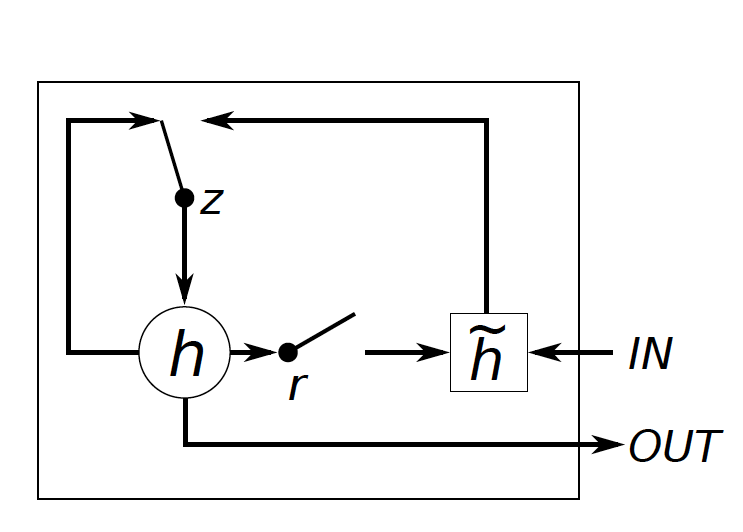

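- Merge the input gate i and the forget gate f into a single update gate, z in the figure

- Merge c and h into a single state h

- Add a reset gate, r in the figure

- Drop the output gate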

Look at the figure and compare the formulas

- Compare $j$ in LSTM with $\tilde{h}_{t}$ in GRU: GRU adds a reset gate

- Compare $c_{t}$ in LSTM with $h_{t}$ in GRU: note the coupling of the gates
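For reference, the two updates being compared can be written side by side. This is a sketch in one common notation, assumed here rather than read off the figure: $W$ and $U$ are weight matrices, $\circ$ is the elementwise product, and biases are omitted.

$$
\begin{aligned}
\text{LSTM:}\quad & j_t = \tanh(W_j x_t + U_j h_{t-1}), \\
& c_t = f_t \circ c_{t-1} + i_t \circ j_t, \\
& h_t = o_t \circ \tanh(c_t) \\
\text{GRU:}\quad & \tilde{h}_t = \tanh(W_h x_t + U_h (r_t \circ h_{t-1})), \\
& h_t = z_t \circ h_{t-1} + (1 - z_t) \circ \tilde{h}_t
\end{aligned}
$$

Setting $f_t = z_t$ and $i_t = 1 - z_t$ in the LSTM cell update gives the form of the GRU update, which is the gate coupling. The reset gate $r_t$ appears only inside $\tilde{h}_t$.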

Merging i and f

The closer a gate signal is to 1, the more is "remembered"; the closer it is to 0, the more is "forgotten".

Instead of separately deciding what to forget and what we should add new information to, we make those decisions together. We only forget when we're going to input something in its place.

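The two weights $z$ and $(1-z)$ are coupled: in every dimension they sum to 1. The incoming state is forgotten selectively, and whatever fraction of it is forgotten is made up by the same fraction of $h'$, which contains the current input. The total weight therefore stays "constant".

With a binarized update gate z, the unit behaves like a single-pole double-throw (SPDT) switch in a circuit.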
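The switch analogy can be checked with a few lines of NumPy. This is a minimal sketch, assuming the convention in which $z$ weights the old state and $(1-z)$ weights the candidate; the function name `blend` and all numbers are made up for illustration.

```python
import numpy as np

def blend(z, h_prev, h_cand):
    """Coupled update: z weights the old state, (1 - z) the candidate."""
    return z * h_prev + (1.0 - z) * h_cand

h_prev = np.array([1.0, 2.0, 3.0])     # previous hidden state (made-up values)
h_cand = np.array([-1.0, -2.0, -3.0])  # candidate state (made-up values)

# A binary gate picks exactly one source per dimension, like an SPDT switch.
z_binary = np.array([1.0, 0.0, 1.0])
h_switch = blend(z_binary, h_prev, h_cand)

# A soft gate mixes the two sources; the two weights still sum to 1.
z_soft = np.array([0.9, 0.5, 0.1])
h_mix = blend(z_soft, h_prev, h_cand)
```

With a binary gate the result is the same as selecting per dimension with `np.where`; with a soft gate each dimension is a convex combination of the two sources.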


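The role of the reset gate

$r_t$ controls how much of the previous memory is kept. If $r_t$ is 0, $\tilde{h}_t$ contains only the information of the current word.

The reset gate does not quite live up to its name: it is used only to compute $h'$ (the candidate state, written $\tilde{h}_t$ elsewhere in these notes).

If the reset gate is close to 0, the previous hidden state $h_{t-1}$ is discarded, which lets the model drop information that is irrelevant to the future.

It seems to me that z can also discard $h_{t-1}$, so why add r at all?

Generally, units that capture short-range dependencies have active reset gates (if $r_t$ is 1 and $z_t$ is 0, the unit reduces to a standard RNN, which handles short-range dependencies), while units that capture long-range dependencies have active update gates.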
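Both special cases of the reset gate can be verified numerically. The sketch below assumes the candidate has the form $\tanh(W x_t + U (r_t \circ h_{t-1}))$ with biases omitted; the names `candidate`, `W`, `U` and the random values are illustrative, not taken from any particular implementation.

```python
import numpy as np

def candidate(x, h_prev, r, W, U):
    """Candidate state: the reset gate r scales h_prev before it is used."""
    return np.tanh(W @ x + U @ (r * h_prev))

rng = np.random.default_rng(0)
n_in, n_hid = 4, 3
W = rng.normal(size=(n_hid, n_in))   # input weights (random, for illustration)
U = rng.normal(size=(n_hid, n_hid))  # recurrent weights (random, for illustration)
x = rng.normal(size=n_in)
h_prev = rng.normal(size=n_hid)

# r = 0: the candidate ignores h_prev and depends on the current input only.
cand_r0 = candidate(x, h_prev, np.zeros(n_hid), W, U)
input_only = np.tanh(W @ x)

# r = 1 and z = 0: the new state is exactly one vanilla RNN step.
z = np.zeros(n_hid)
cand_r1 = candidate(x, h_prev, np.ones(n_hid), W, U)
h_gru = z * h_prev + (1.0 - z) * cand_r1
h_rnn = np.tanh(W @ x + U @ h_prev)
```

Here `cand_r0` matches `input_only` and `h_gru` matches `h_rnn`, which is the sense in which the unit "becomes a standard RNN" when $r_t = 1$ and $z_t = 0$.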

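Merging c and h

$z_t$ controls how much information from the previous hidden state $h_{t-1}$ is forgotten and how much of the current candidate state $\tilde{h}_t$ is added, which gives $h_t$.

This directly yields the final output hidden state. The difference from LSTM here is that GRU has no output gate: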

$$

h_t=z_t\circ h_{t-1} + (1-z_t)\circ \tilde{h}_t

$$
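Putting the pieces together, one GRU step can be sketched as follows. This is a minimal illustration under stated assumptions, not a reference implementation: the gates are assumed to be sigmoids of linear maps of $x_t$ and $h_{t-1}$, biases are omitted, and the parameter names (`Wz`, `Uz`, `Wr`, `Ur`, `Wh`, `Uh`) and helper functions are hypothetical.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gru_step(x, h_prev, p):
    """One GRU step; p is a dict of weight matrices (hypothetical names)."""
    z = sigmoid(p["Wz"] @ x + p["Uz"] @ h_prev)             # update gate
    r = sigmoid(p["Wr"] @ x + p["Ur"] @ h_prev)             # reset gate
    h_cand = np.tanh(p["Wh"] @ x + p["Uh"] @ (r * h_prev))  # candidate state
    return z * h_prev + (1.0 - z) * h_cand                  # no output gate

def init_params(n_in, n_hid, seed=0):
    """Small random weights, for illustration only."""
    rng = np.random.default_rng(seed)
    shapes = {
        "Wz": (n_hid, n_in), "Uz": (n_hid, n_hid),
        "Wr": (n_hid, n_in), "Ur": (n_hid, n_hid),
        "Wh": (n_hid, n_in), "Uh": (n_hid, n_hid),
    }
    return {name: rng.normal(scale=0.1, size=s) for name, s in shapes.items()}

def run_gru(xs, h0, p):
    """Run the cell over a sequence and return every hidden state."""
    h, states = h0, []
    for x in xs:
        h = gru_step(x, h, p)
        states.append(h)
    return np.stack(states)

params = init_params(n_in=4, n_hid=3)
xs = np.random.default_rng(1).normal(size=(5, 4))  # 5 time steps, 4 features
hs = run_gru(xs, np.zeros(3), params)              # shape (5, 3)
```

The hidden state returned by `gru_step` is used directly as the output, whereas an LSTM would pass its cell state through $\tanh$ and an output gate first.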

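Open questions

Question 1

LSTM also has gates, so why does the name GRU put more emphasis on the word "gated"?

Is it to distance GRU from LSTM and stress its link to the plain RNN? For example

Question 2

Looking at the circuit diagram or the formulas, $h_{t-1}$ passes through two switches in turn, $z$ and $r$. What is the design idea behind this information flow? What goes wrong with only $z$, i.e. what happens if r is fixed at 1?

Question 3

How do the switches realize long-term and short-term memory?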

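Long memory: r = z = 0 gives a long dependency, i.e. z thrown to the left in the simplified diagram. (This reads z as the weight on the candidate; in the update equation $h_t=z_t\circ h_{t-1} + (1-z_t)\circ \tilde{h}_t$ used in these notes, keeping $h_{t-1}$ corresponds to $z_t$ close to 1.) Information is then held for a long time, but the catch is that without an output gate the output cannot be controlled.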

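If $z_t$ is close to 1, the previous hidden state is effectively copied to the current time step, so long-range dependencies can be learned.

I don't think that is the whole story: whether long-range dependencies can be captured should depend on both z and r.

Short dependency: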
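The copy claim can be made concrete with a small experiment. The sketch below assumes the update $h_t = z \circ h_{t-1} + (1-z) \circ \tilde{h}_t$ with a constant scalar $z$, and feeds zero candidates so that only what is left of $h_0$ remains; the function name `carry_state` and the numbers are illustrative.

```python
import numpy as np

def carry_state(h0, z, h_cands):
    """Apply h_t = z * h_{t-1} + (1 - z) * h_cand_t for each candidate."""
    h = h0
    for h_cand in h_cands:
        h = z * h + (1.0 - z) * h_cand
    return h

h0 = np.array([1.0, -1.0])   # initial state (made-up values)
T = 100
h_cands = np.zeros((T, 2))   # zero candidates isolate what is left of h0

h_copy = carry_state(h0, 1.0, h_cands)  # z = 1: pure copy of h0
h_leak = carry_state(h0, 0.9, h_cands)  # z = 0.9: h0 decays geometrically
```

With $z$ exactly 1 the state is copied unchanged and the candidate, and hence $r$, has no effect. With $z = 0.9$ the contribution of $h_0$ shrinks by a factor of $0.9^{T}$, and the rest of the state comes from the candidates, which is where $r$ enters.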

Applications

- Baidu's Deep Speech 2

GRU source code

GRU-tensorflow


References

- Learning Phrase Representations using RNN Encoder–Decoder for Statistical Machine Translation, 2014

- Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling

- Evolution: from vanilla RNN to GRU & LSTMs

- Understanding LSTM Networks | colah

- CS224d