Model overview

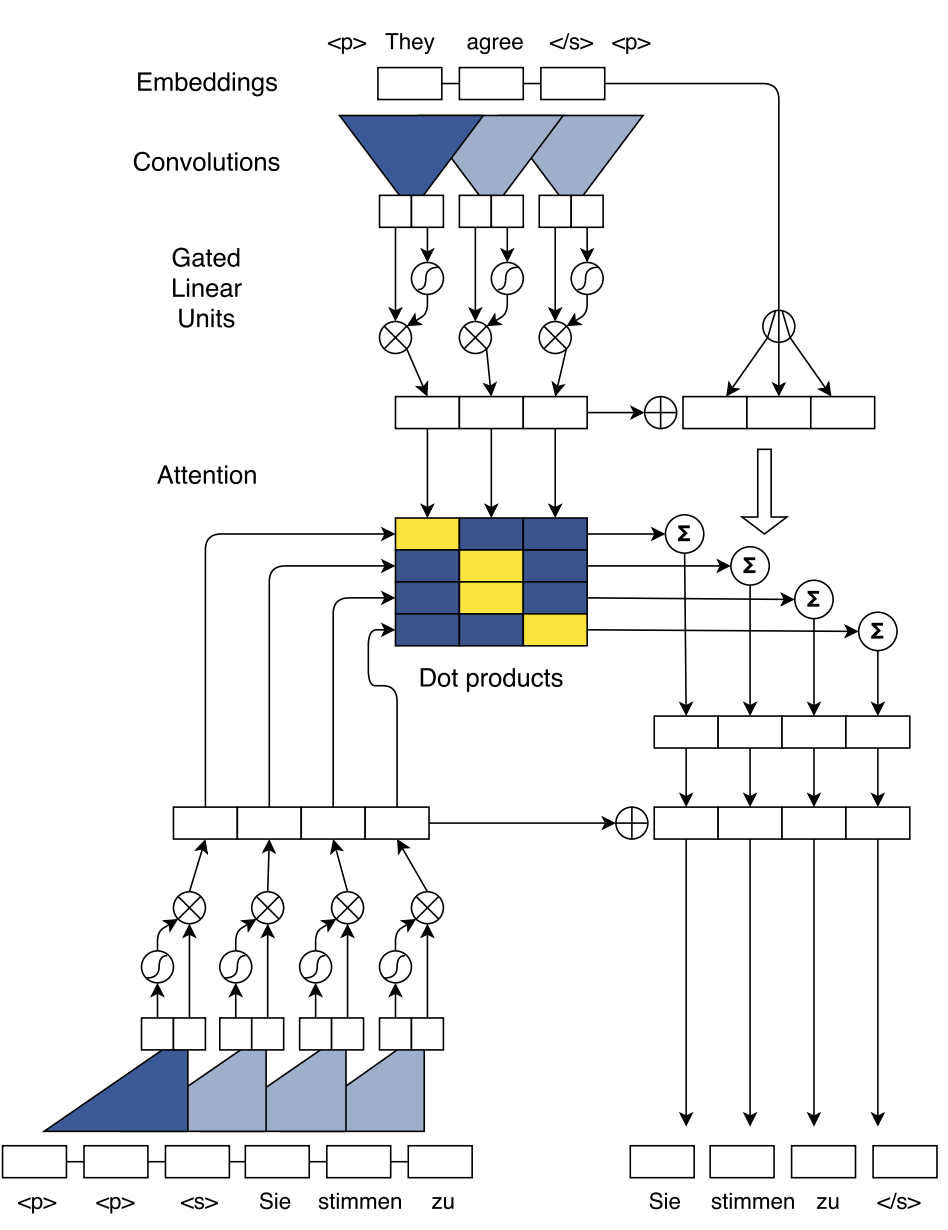

ConvS2S (convolutional sequence-to-sequence) architecture

Note: the attention here is computed for all positions simultaneously, whereas attention in an RNN is computed one step at a time.
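The note above can be illustrated with a minimal sketch in plain Python. It assumes simplified dot-product attention without the learned projections used in the paper, and all names (`attend_all_positions`, `decoder_states`, `encoder_outputs`, `encoder_embeddings`) are hypothetical rather than taken from fairseq. Within one layer during training, every decoder state is already available, so each row of the attention matrix can be computed independently of the others.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attend_all_positions(decoder_states, encoder_outputs, encoder_embeddings):
    """Return (weights, contexts) for every decoder position.

    Assumption: scores are plain dot products d_i . z_j, and the attended
    values are encoder outputs plus input embeddings (z_j + e_j).
    """
    values = [[zk + ek for zk, ek in zip(z, e)]
              for z, e in zip(encoder_outputs, encoder_embeddings)]
    dim = len(values[0])
    weights, contexts = [], []
    # No iteration reads the result of another one, so this loop could run
    # as a single matrix product; an RNN decoder cannot do that because
    # step i needs the hidden state produced at step i - 1.
    for d in decoder_states:
        a = softmax([dot(d, z) for z in encoder_outputs])
        c = [sum(a_j * v[k] for a_j, v in zip(a, values)) for k in range(dim)]
        weights.append(a)
        contexts.append(c)
    return weights, contexts
```

Attending from a single decoder position gives the same row as attending from all positions together, which is what makes the batched computation possible.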

Convolution process

The model is a fully convolutional network (no RNN).

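- Position Embedding: gives the CNN a stronger sense of position

- Gated Linear Units: add a gate to the CNN output

- Residual Connection: added to every CNN layer

- Multi-step Attention: a single attention layer is not enough, so attention is stacked across layers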
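The first three components can be sketched together in plain Python. This is a simplified illustration under stated assumptions, not the fairseq implementation: the helper names (`glu`, `add_positions`, `conv_glu_block`) are hypothetical, the convolution uses symmetric zero padding as an encoder would (a decoder would pad only on the left so that it cannot see future tokens), and normalization and scaling details are left out.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def glu(vec):
    """Gated linear unit: split a 2d-vector into halves A, B; return A * sigmoid(B)."""
    d = len(vec) // 2
    return [a * sigmoid(b) for a, b in zip(vec[:d], vec[d:])]

def add_positions(word_emb, pos_emb):
    """Position embedding: element-wise sum of word and position vectors per step."""
    return [[w + p for w, p in zip(ws, ps)] for ws, ps in zip(word_emb, pos_emb)]

def conv_glu_block(x, weight, bias, k):
    """One block: 1-D convolution (odd width k) -> GLU -> residual connection.

    x:      T vectors of size d
    weight: 2*d rows, each of length k*d (one row per output channel)
    bias:   2*d values
    """
    T, d = len(x), len(x[0])
    pad = k // 2
    zero = [0.0] * d
    padded = [zero] * pad + x + [zero] * pad
    out = []
    for t in range(T):
        # Flatten the k neighbouring vectors into a single window of size k*d.
        window = [v for vec in padded[t:t + k] for v in vec]
        pre = [sum(w * v for w, v in zip(row, window)) + b
               for row, b in zip(weight, bias)]
        gated = glu(pre)                                   # 2d channels -> d
        out.append([g + r for g, r in zip(gated, x[t])])   # residual add
    return out
```

Stacking several such blocks, each followed by its own attention step in the decoder, corresponds to the multi-step attention item in the list.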

Implementation details

Fairseq features:

- multi-GPU (distributed) training on one machine or across multiple machines

- fast beam search generation on both CPU and GPU

- large mini-batch training (even on a single GPU) via delayed updates

- fast half-precision floating point (FP16) training
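The "delayed updates" feature in the list above is commonly known as gradient accumulation. The sketch below shows the general idea on a toy one-parameter model in plain Python; it is not fairseq code, and the names `grad_mse` and `sgd_step_delayed` are hypothetical. Gradients from several small chunks are summed and the parameter is updated once, which matches a single step on the combined batch when the chunks are the same size.

```python
def grad_mse(w, batch):
    """Gradient of the mean squared error for the toy model y_hat = w * x."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)

def sgd_step_delayed(w, chunks, lr):
    """Accumulate gradients over equal-sized chunks, then apply one update."""
    acc = 0.0
    for chunk in chunks:           # each chunk is small enough to fit in memory
        acc += grad_mse(w, chunk)  # accumulate only; no parameter update yet
    return w - lr * acc / len(chunks)

# Usage: two chunks of two examples behave like one batch of four.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
w_big = 0.5 - 0.01 * grad_mse(0.5, data)
w_delayed = sgd_step_delayed(0.5, [data[:2], data[2:]], 0.01)
```

The trade-off is extra forward and backward passes per update in exchange for a larger effective batch size on limited memory.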

Code

- fairseq - torch (no longer updated)

- fairseq - pytorch (actively updated)

Papers

- A Convolutional Encoder Model for Neural Machine Translation 2016

- Convolutional Sequence to Sequence Learning 2017

Further reading

- blog | facebook

- https://www.zhihu.com/question/59645329/answer/167704376